Containerization is an important part of software development. It helps create an isolated and stable environment for our code to run in. Docker is the most popular containerization software out there, which is why "dockerizing" an app is synonymous with containerizing it in today's tech lingo. You'll find Docker listed as an important skill in many job postings. It is that important.

It started with my #DevtoberFest post on LinkedIn. I asked for links to any project with a GitHub repository that needed automated tests and a CI/CD pipeline. A connection of mine shared a link to an issue on the FlaskCon repository. I was psyched. I opened the link and found out that I only had to dockerize the app. HaHaHa. Basic Dockerfile stuff, right? Or so I thought. One of the maintainers, the one who had opened the issue, was kind enough to point me towards docker-compose as a way to solve it. Unfortunately, there were blind spots in my understanding of Docker, and trying to dockerize an application I didn't write was about to expose them and help me get better.

Going into the PR, I made up my mind not to change anything in the app. My PR would contain only Docker-related files and a documentation update. I started with a basic Dockerfile based on the Alpine variant of the Python 3.7 image. I built my Docker image in GitHub Codespaces to save data and for speed. So far, it was going well. Then the problems started showing their beautiful heads.

Problem 1 - Passing environment variables to an image.

I read through the application's README. There were several things to be done, and the instructions were straightforward but ambiguous at several points. For example, I had no idea how to start the server or how to pass the SQL database URI to the application. Many of my NodeJS projects depend on an environment variable to connect to a database, and I usually supply a .env file when running the Docker container. It didn't seem like that would work in this case: the docs explicitly said to create a directory containing a config.py file, and that file should contain the SQL database URI. I did the natural thing that came to mind: I asked the maintainer and Googled it.

The maintainer shared links explaining how Flask handles environment variables and the instance folder. Googling led me to articles about volume mounting in Docker. In essence, I could mount a local directory (or a single file) into the Docker container. The plan was to mount a file containing the URI as instance/config.py. I was making progress, or so I thought. To be sure it was working before I moved on, I temporarily placed the URI of a throwaway SQL database into the Docker image. Then the next problem struck.
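For readers coming from the .env habit described above, here is a minimal sketch of what an instance/config.py could look like if it read the URI from an environment variable instead of hard-coding it. It is not the FlaskCon file: the variable name `DATABASE_URL`, the config key `SQLALCHEMY_DATABASE_URI` (the Flask-SQLAlchemy convention) and the SQLite fallback are all assumptions for illustration. Flask can load a file like this from the instance folder with `app.config.from_pyfile("config.py")` when the app is created with `instance_relative_config=True`.

```python
# instance/config.py (hypothetical sketch, not the FlaskCon file)
# Reads the database URI from an environment variable so the same file
# works locally, in a single container, or under Docker Compose.
import os

# Assumed variable name; use whatever your deployment actually sets.
_ENV_VAR = "DATABASE_URL"

# Assumed fallback: a local SQLite file for quick experiments.
_DEFAULT_URI = "sqlite:///local.db"


def database_uri(environ=None):
    """Return the database URI from the environment, or the fallback."""
    env = os.environ if environ is None else environ
    uri = env.get(_ENV_VAR, "").strip()
    return uri or _DEFAULT_URI


# Flask only loads UPPERCASE names from a config file.
SQLALCHEMY_DATABASE_URI = database_uri()
```

With a file like this, passing the variable at run time (for example with `docker run --env-file .env ...`, or an `environment:` entry in a Compose file) could replace mounting a file that contains the URI.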

Problem 2 - Ambiguity of application documentation.

How do I start this application? I hadn't used Flask before. One would have thought it was along the lines of running `python app.py` (or `flask run`), as I found on Google. It wasn't. And it wasn't in the docs, or so I thought. Although the start command wasn't spelled out explicitly in the docs (another opportunity for a PR), the bigger issue was that I hadn't tried to run the server myself before trying to dockerize it. Who does that? Me.

So I followed the steps in the README and was able to get an environment up. The server started with `python manage.py runderberg`. I Googled "runderberg" and nothing came back (I thought I would learn a new German word). That solved the second problem. On to the third.

Problem 3 - Unable to run multiple processes in a container.

The documentation stated that NodeJS is needed to set up a MailDev environment. Wait a minute! Didn't that mean I needed to install NodeJS along with Python in the container? I began to do that. It was hard. It was also the wrong thing to do, and I wasted time.

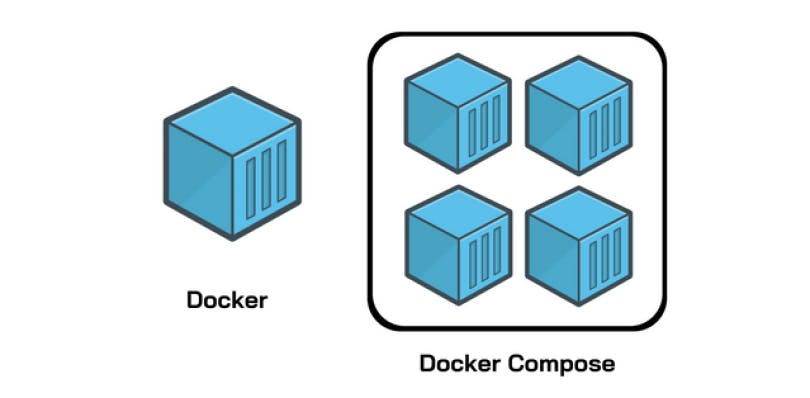

The problem was that I saw a Docker image as an abstraction of an isolated Linux operating system, one in which I could run any process I wanted. What I didn't know was that a Docker container is designed around a single main process: it runs the one command it was started with and stops when that process exits. Running MailDev alongside the Flask app in the same container would have gone against that design and needed extra tooling, such as a process supervisor. I asked my DevOps mentor (David Alpert) about it. He explained many things, the most important being how Docker Compose solves problems like this.

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application's services. Then, with a single command, you create and start all the services from your configuration. An application can be made up of several services, each running in its own container. In my case, those would be the SQL database, the Flask application and the MailDev environment. Compose creates a network over which the containers can communicate, reaching each other by service name. Compose not only solved my multiple-process problem; it also removed the need to mount the SQL database URI file into the container as a volume. Instead, I used volume mounting to persist the data in the SQL database. It was interesting to see the knowledge coming together.
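Because containers on a Compose network reach each other by service name, the Flask container can talk to the database at a hostname such as `db` rather than `localhost`. One catch is that `depends_on` by default only controls start order, not whether the database is ready to accept connections. Below is a minimal, standard-library-only sketch of a readiness check the app container could run before starting the server; the function name and its parameters are hypothetical, not part of Docker or FlaskCon.

```python
import socket
import time


def wait_for_service(host, port, timeout=30.0, interval=0.5):
    """Poll host:port until a TCP connection succeeds or timeout expires.

    Returns True once the port accepts connections, False on timeout.
    On a Compose network, `host` would be a service name (e.g. "db").
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection resolves the name and opens a TCP socket.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Name not resolvable yet, connection refused, or timed out.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

In an entrypoint script, a call like `wait_for_service("db", 5432, timeout=60)` would block until the database port answers; the service name and port are placeholders to match against your own compose file. Compose also supports health checks for the same purpose.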

Problem 4 - Long image build time.

I noticed that my images took a long time to build. I was using GitHub Codespaces because I didn't want to incur the data charges that come with building on my local machine, and because the server is considerably faster than my laptop. A build time of almost three minutes defeated the purpose of both. Verily, something must be wrong.

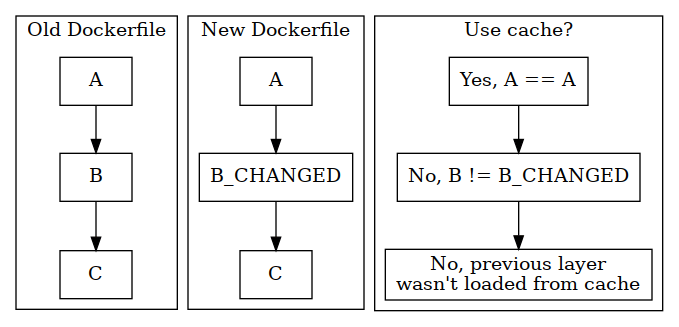

It was during my discussion with David that I learnt about Docker's build cache. Docker caches the layers it builds and reuses them in later builds as long as nothing that goes into a layer has changed: the instruction itself, the layers before it, and, for COPY and ADD, the files being copied. If a Dockerfile has six steps and the fourth step changes, the first three layers are reused from the cache, while the fourth, fifth and sixth are rebuilt, because every layer after a changed one is invalidated. This saves bandwidth and computing resources. I had to rearrange the commands in the Dockerfile to take advantage of Docker's caching. The first part of the Dockerfile contained the things least likely to change, such as the base image and dependency updates. Then came the pip installations, then the database migrations. My build time dropped to around 30 seconds. A great improvement, but I still wouldn't use my local machine: internet plans are quite expensive in Nigeria.
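To make the caching rule concrete, here is a toy model in Python. It is a simplification, not Docker's actual implementation: each layer's cache key is a hash of the previous layer's key plus the instruction text and, for COPY steps, the contents of the copied files. Changing one step therefore changes every key after it. The two Dockerfiles below are hypothetical (they only borrow the Python 3.7 Alpine base image mentioned earlier) and compare copying the whole source tree before `pip install` with copying just `requirements.txt` first.

```python
import hashlib


def layer_keys(steps, files):
    """Toy cache keys: each key chains the parent key, the instruction
    and, for COPY steps, the contents of the files being copied."""
    keys = []
    parent = ""
    for step in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(step.encode())
        if step.startswith("COPY "):
            # Toy parsing of "COPY <src...> <dest>"; "." means every file.
            sources = step.split()[1:-1]
            for path in sorted(files):
                if "." in sources or path in sources:
                    h.update(path.encode())
                    h.update(files[path].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(old_steps, new_steps, old_files, new_files):
    """1-based indices of steps whose layer cannot come from the cache."""
    old = layer_keys(old_steps, old_files)
    new = layer_keys(new_steps, new_files)
    return [i + 1 for i, key in enumerate(new)
            if i >= len(old) or key != old[i]]


# Hypothetical Dockerfile: the whole source tree is copied before pip install.
COPY_ALL_FIRST = [
    "FROM python:3.7-alpine",
    "COPY . /app",
    "RUN pip install -r /app/requirements.txt",
]

# Hypothetical cache-friendly order: dependencies first, source code last.
DEPS_FIRST = [
    "FROM python:3.7-alpine",
    "COPY requirements.txt /app/",
    "RUN pip install -r /app/requirements.txt",
    "COPY . /app",
]

before = {"requirements.txt": "flask\n", "app.py": "print('v1')\n"}
after = {"requirements.txt": "flask\n", "app.py": "print('v2')\n"}  # code edit only

print(rebuilt_steps(COPY_ALL_FIRST, COPY_ALL_FIRST, before, after))  # [2, 3]
print(rebuilt_steps(DEPS_FIRST, DEPS_FIRST, before, after))  # [4]
```

With the dependencies-first order, a code-only change rebuilds just the final COPY layer, so the slow `pip install` layer is served from the cache.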

Final words

I am not done with the containerization yet, but I have learnt a lot along the way, including:

- Reading the documentation before getting started on an issue.

- Asking questions when you are stuck.

- Caching of image layers.

- Volume mounting to persist data in the SQL database.

- Using Compose to run multi-container Docker applications.

The ongoing draft pull request can be found on GitHub. I will keep you updated. Thank you for reading.